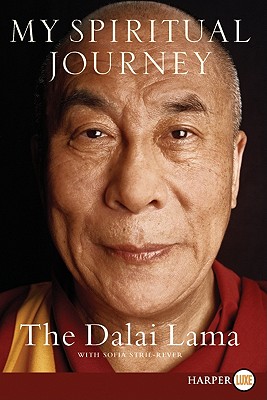

As I mentioned in my previous posting, I’m reading the Dalai Lama’s My Spiritual Journey. Actually, I’m reading it for the second time and I will probably read it again at least once more. It’s not hard to understand. It’s well written. Ninety percent of what the Dalai Lama writes I understand perfectly and I agree with completely. What throws me for a loop is when he writes, “Phenomena, which manifest to our faculties of perception, have no ultimate reality.”

Is the Dalai Lama speaking metaphorically? I don’t think so, though apparently there are two schools of Buddhist thought on the subject of reality (see here).

This is especially confusing for me because I am a scientist. There are days when I have a hard time believing this myself, but I’ve hung my diplomas in my office so that whenever I have a moment of self-doubt I can gaze at the expensively framed certificates. The signatures look real. I doubt that the President of the Board of Regents signs the undergrad degrees, but his signature on my doctorate looks pretty legit.

My doctoral research, and the research that I do now, involves unsupervised machine learning (UML), and it is very much tied to reality, or at least to our perceptions of reality. Curiously, the early research in what would become UML was conducted by psychologists who were interested in visual perception and in how the human brain categorizes perceived objects.

So, let’s proceed as if this universe and everything in it are objects that can be described. Our perceptions may be completely wrong – they may have no ultimate reality – but I know that unsupervised machine learning works, at least in this illusion that we call ‘reality’.

For a machine to make intelligent decisions about the world it has to understand the world. It has to grok the world.

Key concept: The world is made up of objects. Objects are described by attributes.

Therefore, the world can be described, and it can be understood in the context of similar situations observed previously. In essence, the machine says, “I can categorize this new situation. I have seen something similar (or many similar things) before. I can put this new situation in the context of previously observed things. This is what happened previously. These are the things that I need to be aware of. This is what I need to look at now.”

So, let’s talk about objects and attributes.

Let’s start by selecting some random object on my desk. Okay, a coffee cup. What attributes can we use to describe the coffee cup object? Well, it’s a cylinder, closed at one end and open at the other, and it has a height, a radius, a weight and a handle. It also has a cartoon on it, and it has some fruit juice in it. So we can describe the object with these attributes. However, some of these attributes are very important, while others (the fact that the coffee cup contains fruit juice or has a cartoon on it) are irrelevant to the object being a coffee cup.
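One way to make “objects are described by attributes” concrete is a small record type. The sketch below is only an illustration: the class `DeskObject`, the attribute names and the set `RELEVANT` are hypothetical, and deciding which attributes count as relevant is exactly the judgement the post later hands to Subject Matter Experts.

```python
from dataclasses import dataclass, field


@dataclass
class DeskObject:
    """Hypothetical record: an object's name plus the attributes observed on it."""
    name: str
    attributes: dict = field(default_factory=dict)


# Hypothetical choice of attributes that matter for classification.
# The cartoon and the juice are deliberately left out.
RELEVANT = {"shape", "closed_ends", "handles"}


def relevant_view(obj: DeskObject) -> dict:
    """Return only the attributes used for classification, in their original order."""
    return {k: v for k, v in obj.attributes.items() if k in RELEVANT}


cup = DeskObject(
    name="coffee cup",
    attributes={
        "shape": "cylinder",
        "closed_ends": 1.0,
        "handles": 1.0,
        "has_cartoon": True,
        "contents": "fruit juice",
    },
)

print(relevant_view(cup))  # {'shape': 'cylinder', 'closed_ends': 1.0, 'handles': 1.0}
```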

Now let’s look at some other random objects on my desk. I’ve got five plastic paper clip containers. They are also cylinders, closed at one end and open at the other, but none of them has a handle. Also on my desk are two plastic barrels of Juicy Fruit gum, which are cylinders closed at both ends.

So, if we were to take these eight objects from my desk (yes, I have a very large desk) and start ‘feeding’ them into our machine, we would end up with three ‘clusters’ of objects: one cluster of five objects described as cylinders closed at one end and open at the other, one cluster of two objects described as cylinders closed at both ends, and one cluster of one object described as a cylinder closed at one end, open at the other, and with a handle.
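The three clusters can be reproduced with a deliberately naive sketch that groups objects whose relevant attribute values are identical. This is not how an unsupervised learner actually builds clusters (real data rarely matches exactly); it only illustrates the end result described above. Each object is reduced to a hypothetical tuple (closed ends, handles); shape is left out because every object here is a cylinder.

```python
from collections import defaultdict

# The eight desk objects, each described by (number of closed ends, number of handles).
objects = (
    [("paper clip container", (1.0, 0.0))] * 5
    + [("Juicy Fruit barrel", (2.0, 0.0))] * 2
    + [("coffee cup", (1.0, 1.0))]
)


def group_by_description(items):
    """Put objects with identical attribute values into the same cluster.

    A naive stand-in for clustering: a real unsupervised learner also has to
    decide what to do with objects that only partly match.
    """
    clusters = defaultdict(list)
    for name, description in items:
        clusters[description].append(name)
    return dict(clusters)


for description, members in group_by_description(objects).items():
    print(description, len(members), members[0])
# (1.0, 0.0) 5 paper clip container
# (2.0, 0.0) 2 Juicy Fruit barrel
# (1.0, 1.0) 1 coffee cup
```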

Now I just went and got a cup of coffee and put it on my desk. The machine asks itself (and we’ll get into how this is done in the next installment): does this new object belong with the paper clip containers? No, it has a handle. Does it belong with the Juicy Fruit gum barrels? No, one end of the cylinder is open and it has a handle. The machine then compares it with the other ‘coffee cup’ object, sees that they’re very similar, and places the new object with the previously observed and categorized coffee cup. Voilà!
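Since the post defers the real mechanism to the next installment, here is a simpler stand-in: score each existing cluster by how many attribute values it shares with the new object and pick the best. Counting matching attributes is an assumption made for illustration, not the category utility approach the author uses; the cluster summaries reuse hypothetical (closed ends, handles) tuples.

```python
# Each existing cluster summarised by the attribute values its members share:
# (number of closed ends, number of handles).
clusters = {
    "paper clip containers": (1.0, 0.0),
    "Juicy Fruit barrels": (2.0, 0.0),
    "coffee cups": (1.0, 1.0),
}


def matching_attributes(a, b):
    """Count how many attribute values two descriptions have in common."""
    return sum(x == y for x, y in zip(a, b))


def place(new_object, clusters):
    """Return the name of the cluster whose description best matches new_object."""
    return max(clusters, key=lambda name: matching_attributes(new_object, clusters[name]))


new_coffee = (1.0, 1.0)  # one closed end, one handle
print(place(new_coffee, clusters))  # coffee cups
```

Mirroring the questions in the text: the paper clip containers match on closed ends but not handles (score 1), the gum barrels match on nothing (score 0), and the coffee cups match on both (score 2).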

How does the machine ‘see’ where to place new objects? It uses a category utility function, which will be the subject of the next blog post.
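For readers who want a preview, here is a sketch of one common textbook form of category utility, the form used by the COBWEB clustering algorithm for nominal attributes. It rewards partitions in which knowing an object’s cluster makes its attribute values easier to guess than knowing nothing. It may not be the variant used in the author’s research; the function name, the tuple encoding and treating each value (0.0, 1.0, 2.0, …) as a discrete category are all assumptions.

```python
from collections import Counter


def category_utility(clusters):
    """Category utility of a partition (nominal-attribute form, as in COBWEB).

    CU = (1/K) * sum_k P(C_k) * [ sum_i sum_j P(A_i = V_ij | C_k)^2
                                  - sum_i sum_j P(A_i = V_ij)^2 ]

    clusters: list of K non-empty clusters; each cluster is a list of objects,
    and each object is a tuple of attribute values.
    """
    everything = [obj for cluster in clusters for obj in cluster]
    n = len(everything)
    n_attrs = len(everything[0])

    def expected_correct_guesses(objs):
        # Sum over attributes i and values j of P(A_i = V_ij)^2 within objs.
        total = 0.0
        for i in range(n_attrs):
            counts = Counter(obj[i] for obj in objs)
            total += sum((c / len(objs)) ** 2 for c in counts.values())
        return total

    baseline = expected_correct_guesses(everything)
    gain = sum(
        (len(cluster) / n) * (expected_correct_guesses(cluster) - baseline)
        for cluster in clusters
    )
    return gain / len(clusters)


paper = [(1.0, 0.0)] * 5   # paper clip containers
barrels = [(2.0, 0.0)] * 2  # Juicy Fruit barrels
cups = [(1.0, 1.0)]         # coffee cup

print(round(category_utility([paper, barrels, cups]), 4))  # 0.1979
print(round(category_utility([paper + cups, barrels]), 4))  # 0.1927
```

The three-cluster partition from the post scores higher than one that lumps the coffee cup in with the paper clip containers, which is the behaviour a clustering method would exploit.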

How do you know which attributes are important for classification and which are irrelevant (like the cartoon on the coffee cup)? This involves humans, specifically Subject Matter Experts (SMEs). In my doctoral research I showed that it is crucial to include SMEs throughout the development process. We conduct blind surveys with SMEs to:

- Determine whether there is a consensus among the SMEs that specific objects can be defined by specific attributes.

- Determine what those attributes are.

- Validate the algorithms that return ‘real world values’ describing these attributes.

- Validate the machine’s output.

Algorithms that describe attributes must return ‘real world values’, which is just a fancy way of saying numbers with a decimal point. For example, an algorithm that returns a value for ‘number of closed ends of a cylinder’ would return 0.0, 1.0 or 2.0, and an algorithm that returns a value for ‘number of handles’ would return 0.0, 1.0, 2.0, 3.0 and so on. What, you say, a three-handled cup? Yup, such beasts exist (see picture at the right).
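A minimal sketch of two such attribute algorithms, assuming the raw observations are already available as simple Python values (two booleans for the ends, a list for the handles). The function names and inputs are hypothetical; the point is only that even a count comes back as a real value.

```python
def closed_ends(top_closed: bool, bottom_closed: bool) -> float:
    """Hypothetical attribute algorithm: number of closed ends of a cylinder."""
    return float(top_closed) + float(bottom_closed)


def handle_count(handles: list) -> float:
    """Hypothetical attribute algorithm: number of handles, as a real value."""
    return float(len(handles))


def describe(top_closed: bool, bottom_closed: bool, handles: list) -> tuple:
    """Combine the attribute values into one real-valued description."""
    return (closed_ends(top_closed, bottom_closed), handle_count(handles))


print(describe(False, True, ["right"]))                  # (1.0, 1.0): ordinary coffee cup
print(describe(True, True, []))                          # (2.0, 0.0): Juicy Fruit barrel
print(describe(False, True, ["left", "right", "back"]))  # (1.0, 3.0): three-handled cup
```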

Okay, the next episode involves some math. So first have a lie down and think cool thoughts until the panic subsides.