Many years ago, when I was an undergrad, I had to take a religion course. This was something that I flat out refused to do and I discovered, as I so often do, a loophole in the system ripe for exploitation: certain philosophy courses counted as a ‘religion credit’ and one of these, Philosophical Cybernetics, was being offered that semester.

This class had two titles: the philosophical one and Introduction to Artificial Intelligence. Same class, same professor, same credits, but depending on how you signed up for it, it would count as the required religion class.

I haven’t thought about the phrase “Philosophical Cybernetics” in a long time. Because the professor (who shall remain nameless for reasons soon to be obvious) used the terms ‘philosophical cybernetics’ and ‘artificial intelligence’ interchangeably, I always assumed that they were tautological equivalents. It’s a good thing that I checked before writing today’s blog because, as with a lot of things, this professor was wrong about this, too.

Today I learned (from http://www.pangaro.com/published/cyber-macmillan.html):

Artificial Intelligence and cybernetics: Aren’t they the same thing? Or, isn’t one about computers and the other about robots? The answer to these questions is emphatically, No.

Researchers in Artificial Intelligence (AI) use computer technology to build intelligent machines; they consider implementation (that is, working examples) as the most important result. Practitioners of cybernetics use models of organizations, feedback, goals, and conversation to understand the capacity and limits of any system (technological, biological, or social); they consider powerful descriptions as the most important result.

The professor who taught Philosophical Cybernetics had a doctorate in philosophy and he freely admitted on the first day of class that he didn’t know anything about AI and that, “we were all going to learn this together.” I actually learned quite a bit about AI that semester, though obviously little of it was in that class.

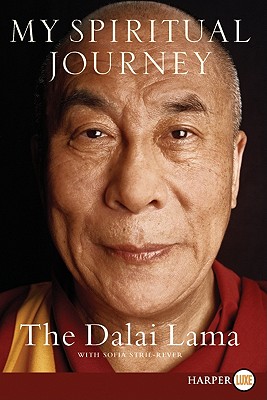

My Spiritual Journey by H. H. The XIV Dalai Lama

Anyway, the whole point of titling today’s blog as, “Philosophical Cybernetics” was going to be this clever word play on the philosophy of AI. This has come about because I’ve been reading, “My Spiritual Journey” by the Dalai Lama and I’ve been thinking about what I do for a living and how it relates to an altruistic, compassionate and interconnected world. Short answer: it doesn’t.

However, it did get me thinking about the power of AI and – hold on to your hats because this is what you came here to read – how AI will eventually kick a human’s ass in every conceivable game and subject. This event – the day when computers are ‘smarter’ than humans – is commonly referred to as the Kurzweil Singularity and the Wiki link about it is: http://en.wikipedia.org/wiki/Technological_singularity .

Let’s backtrack for a second about Ray Kurzweil. He pretty much invented OCR (Optical Character Recognition) and, as I understand it, made a ton of money selling it to Xerox. Then he invented the Kurzweil digital keyboard. This was the first digital keyboard I ever encountered and I can’t tell you how wonderful it was and how, eventually, digital keyboards gave me a new lease on playing piano.

Here are some links to me playing digital keyboards (I actually play an Oberheim, not a Kurzweil, but Kurzweil created most of the technology):

Nicky (an homage to Nicky Hopkins)

Boom, boom, boom! Live with Mojo Rising

Looking Dangerous (with Jerry Brewer)

Old 65

A boogie (with Jason Stuart)

When I first heard Ray Kurzweil talk about ‘The Singularity’ I remember him saying that it was going to happen during his lifetime. Well, Ray is six years older than me and my response was, “that’s not likely unless he lives to be about 115.”

NEWSFLASH: Well, this is embarrassing. Ray Kurzweil was just on the Bill Maher Show (AKA Real Time with Bill Maher) last night (and I vowed that this blog would never be topical or up to date). Anyway, Kurzweil did clarify a couple of important issues:

- Kurzweil was going to live practically forever (I can’t remember if it was him or Maher that used the phrase ‘immortal’) and he takes 150 pills a day to achieve this goal. So, I’m thinking, “well, this explains how he expects the Singularity to happen during his lifetime; he’s going to live for thousands of years!” And then he drops this:

- The Singularity will occur by 2029!

I think Ray Kurzweil is a brilliant guy but I am dubious that the Singularity will occur by 2029 much less during my lifetime. I would like to live as long as Ray Kurzweil thinks he’s going to live but the actuarial tables aren’t taking bets on me after another 20 years or so.

Alan Turing, the most brilliant mind of the 20th century.

Alan Turing, in my opinion, had the most brilliant mind of the 20th century. He is one of my heroes. He also wrote the following in 1950:

“I believe that in about fifty years’ time it will be possible, to programme computers, with a storage capacity of about [10^9 bits], to make them play the imitation game so well that an average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning. The original question, “Can machines think?” I believe to be too meaningless to deserve discussion. Nevertheless I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.” (Emphasis added)

- Turing, A.M. (1950). Computing machinery and intelligence. Mind, 59, 433-460.

Okay, so my hero, Alan Turing, got the whole ‘computers will be intelligent within 50 years’ thing completely wrong. I think that Kurzweil’s prediction of it occurring within 18 years is just as unlikely.

But I do think that AI will eventually be everything that Turing and Kurzweil imagined. When do I think this will happen? I dunno, let’s say another 50 years, maybe longer; either way it will be after I have shuffled off this mortal coil. I like to think that my current research will play a part in this happening. It is my opinion that the Kurzweil Singularity, a computer passing Turing’s Test, or a computer displaying, “human level intelligence” will not occur without unsupervised machine learning. Machine learning is absolutely crucial for AI to achieve the level of results that we want. ‘Supervised’ machine learning is good for some simple parlor tricks, like suggesting songs or movies, but it doesn’t actually increase a computer’s ‘wisdom’.

The last subject that I wanted to briefly touch upon was why, ultimately, AI will kick a human’s ass in any game: it’s because AI has no compassion, doubt, or hesitation, and it doesn’t make mistakes. I suppose you could program AI to have compassion, doubt, and hesitation, and to make mistakes, but it would certainly be more trouble than it’s worth.

So, someday computer AI will be the greatest baseball manager of all time. I look forward to this day. I hope I live long enough to see that day. Because that day the Chicago Cubs will finally win the World Series.

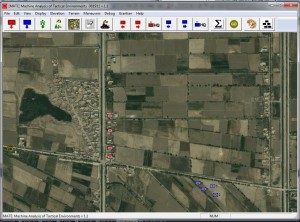

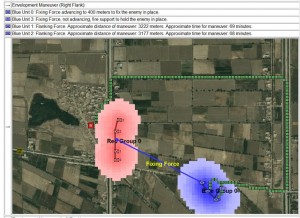

In addition to enhanced military training, researchers also want to use the new program to develop enhanced technology. Ezra Sidran, a computer scientist, said he planned to apply for the naval research funding to investigate “unsupervised machine learning,” in which a computer program independently “analyzes, remembers and learns.”